What Is a Traceroute Test?

Posted on March 7th, 2013 by Boyana Peeva in Tools, WebSitePulse News

If you're having issues with your network connection, you may need to investigate exactly where the problem is occurring. Maybe your data packets are getting stopped right out of the gate, or perhaps it's just a particular route that they're taking that is causing the issues.

A traceroute test shows the path your data packets take, hop by hop, when you connect to a specific IP address. This information tells you where the breakdown happens, which helps you fix it.
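To make the idea concrete, here is a minimal Python sketch that reads traceroute-style output and reports the last hop that answered. It assumes the common Unix `traceroute` text layout (a hop number followed by replies, or `* * *` when no reply arrived). The helper names and the sample output are hypothetical, and the sample uses reserved documentation IP addresses. Keep in mind that a silent hop is only a hint: some routers simply do not reply to probes.

```python
import re
import subprocess

# A hop line starts with the hop number, e.g. " 3  * * *".
HOP_LINE = re.compile(r"^\s*(\d+)\s+(.*)$")


def parse_hops(output):
    """Return a list of (hop_number, responded) tuples from traceroute-style text."""
    hops = []
    for line in output.splitlines():
        match = HOP_LINE.match(line)
        if not match:
            continue  # skip the header line and blank lines
        hop_number = int(match.group(1))
        rest = match.group(2)
        # A hop that printed only asterisks returned no reply to any probe.
        responded = any(token != "*" for token in rest.split())
        hops.append((hop_number, responded))
    return hops


def last_responding_hop(hops):
    """Return the number of the last hop that replied, or None if none did."""
    responding = [number for number, responded in hops if responded]
    return responding[-1] if responding else None


def run_traceroute(host):
    """Run the system traceroute command and return its text output.

    Assumption: a Unix-like system with `traceroute` installed.
    The -n option prints numeric addresses instead of host names.
    """
    result = subprocess.run(
        ["traceroute", "-n", host],
        capture_output=True,
        text=True,
        check=False,
    )
    return result.stdout


# Invented sample output for illustration only.
SAMPLE = """traceroute to 203.0.113.10 (203.0.113.10), 30 hops max, 60 byte packets
 1  192.0.2.1  1.102 ms  0.981 ms  0.942 ms
 2  198.51.100.1  9.310 ms  9.102 ms  9.455 ms
 3  * * *
 4  * * *
"""
```

With the sample above, `last_responding_hop(parse_hops(SAMPLE))` returns hop 2, which suggests the investigation should start just past that point.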

How to Validate an E-mail Address

Posted on March 5th, 2013 by Boyana Peeva in Tech

Email Ghosts

Email providers are celebrated for offering an easy, accessible, and free service, but that accessibility often comes with minimal security. The problem surfaces when people need to know whether an email address they have received is valid. Invalid or invented email addresses have become a bane for online businesses, networks, and other groups that rely on email to communicate with prospective clients. Users also frequently wonder whether a mysterious email they received is legitimate. Illegitimate emails often act as a dangerous gateway for viruses, spyware, and phishing tools.
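A first, very rough check is whether an address is even shaped like an email address. The Python sketch below is one simplified way to do that. The pattern and the function name `looks_like_email` are assumptions made for illustration, the pattern is deliberately stricter than what the email standards allow, and passing the check does not prove that the mailbox exists or that a message from it is legitimate.

```python
import re

# Simplified pattern: a local part, one "@", and a domain with at least one dot.
# This is an illustrative assumption, not a full implementation of the standards.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9-]+(\.[A-Za-z0-9-]+)+")


def looks_like_email(address):
    """Return True if the string has the basic shape of an email address."""
    if len(address) > 254:  # commonly cited upper bound on address length
        return False
    return EMAIL_PATTERN.fullmatch(address) is not None
```

For example, `looks_like_email("user@example.com")` passes, while `"user@@example.com"` and `"user@example"` are rejected.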

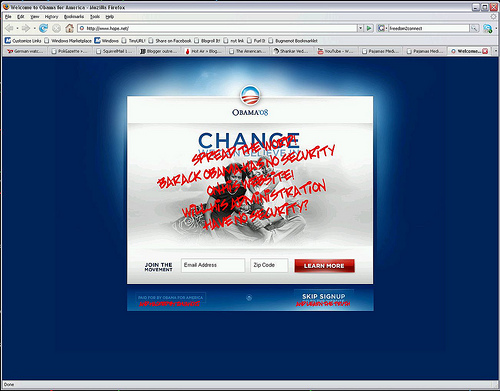

What Is Website Defacement?

Posted on March 5th, 2013 by Lily Grozeva in Tech

Since most of the world is living and doing business online, vandals have been forced to evolve with the digital revolution. Instead of defacing physical property, hackers can now deface the websites of businesses and other organizations. By educating yourself on what website defacement is and what measures can be taken to prevent it, you could save your operation a great deal of embarrassment and stress.

Why Monitoring Your Website Matters

Posted on February 27th, 2013 by Boyana Peeva in Monitoring, Tech

Whether your website functions as a major part of your business model, a marketing tool, or an information portal, you want to ensure it functions as well as possible.

Many website owners mistakenly believe that monitoring consists of determining how many visitors they receive on their websites, the number of social media followers they acquire, their keywords’ rankings, and other similar metrics.

The 10 Most Popular Blocked Websites in China

Posted on February 25th, 2013 by Boyana Peeva in Lists

Users in mainland China have had their Internet censored by the government for several years now, with more than 2600 websites being blocked at one time or another.

The reasons are as varied as the websites themselves, and China doesn't just block websites. The government also monitors its citizens' Internet usage. A number of people, mostly journalists, are in jail on charges ranging from signing petitions to speaking out against the Chinese government.

Copyright 2000-2026, WebSitePulse. All rights reserved.
